
robots.txt, sitemap.xml, and llms.txt are files that search engines and other bots use to discover your content and to learn where you do and don't want them to go. robots.txt holds a set of rules that you are asking bots to follow about which parts of your site they can and can't visit. sitemap.xml is a list of the pages you would like bots to crawl. llms.txt is a newer, proposed convention: a Markdown file intended to make it easier for AI tools to find the key information on your site.
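Here is a minimal sketch of how a crawler might decide whether it may fetch a path, to show what "a series of rules" means in practice. It uses a simplified model. There is one group of rules that already applies to the bot, and paths are plain prefixes with no `*` or `$` wildcards. Precedence follows RFC 9309 and Google's documentation: the longest matching rule wins, and Allow wins a tie. `parseRules` and `isAllowed` are our own illustrative names.

```typescript
// Simplified robots.txt matcher (sketch). Assumptions: the rules below already
// apply to our user agent, paths are plain prefixes (no "*" or "$" wildcards),
// and no percent-encoding normalisation is done.
type Rule = { allow: boolean; path: string };

function parseRules(robotsTxt: string): Rule[] {
  const rules: Rule[] = [];
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    // Strip comments and surrounding whitespace.
    const line = rawLine.split("#")[0].trim();
    const match = /^(allow|disallow)\s*:\s*(.*)$/i.exec(line);
    // An empty path (e.g. "Disallow:") matches nothing, so skip it.
    if (match && match[2] !== "") {
      rules.push({ allow: match[1].toLowerCase() === "allow", path: match[2] });
    }
  }
  return rules;
}

function isAllowed(rules: Rule[], urlPath: string): boolean {
  let best: Rule | undefined;
  for (const rule of rules) {
    if (!urlPath.startsWith(rule.path)) continue;
    // Longest (most specific) match wins; on a tie, Allow wins.
    if (
      best === undefined ||
      rule.path.length > best.path.length ||
      (rule.path.length === best.path.length && rule.allow)
    ) {
      best = rule;
    }
  }
  // No matching rule means the path is allowed.
  return best === undefined ? true : best.allow;
}

const rules = parseRules(`
User-agent: *
Disallow: /admin/
Allow: /admin/public/
`);
console.log(isAllowed(rules, "/admin/settings")); // false
console.log(isAllowed(rules, "/admin/public/page")); // true
console.log(isAllowed(rules, "/blog/post")); // true
```

Real crawlers also handle wildcards, multiple user-agent groups and URL encoding, so treat this as an illustration of precedence only.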

Knowing when, and how often, bots fetch these files can give you interesting and useful information about bot activity on your site. You could parse your web server logs for these requests, but that is often complex. Most modern web analytics software, like Google Analytics, uses JavaScript embedded in HTML pages. Because .txt and .xml files can't contain JavaScript, this method ignores them completely.
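This is roughly what the log-parsing route involves. The sketch assumes your server writes the standard "combined" log format, which many Apache and Nginx setups use by default, and that you already have the log text in hand. Real setups add custom formats, rotated and compressed files, and multiple servers, and that is where the complexity creeps in. `countTrackedHits` is a hypothetical helper.

```typescript
// Sketch: count requests for the three files in an access log written in the
// "combined" format. Assumes one request per line; lines that don't match
// the pattern are skipped.
const TRACKED = new Set(["/robots.txt", "/sitemap.xml", "/llms.txt"]);

// ip ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
const COMBINED =
  /^(\S+) \S+ \S+ \[([^\]]+)\] "(\S+) (\S+)[^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"/;

// Returns path -> (user agent -> number of requests).
function countTrackedHits(logText: string): Map<string, Map<string, number>> {
  const counts = new Map<string, Map<string, number>>();
  for (const line of logText.split(/\r?\n/)) {
    const m = COMBINED.exec(line);
    if (!m) continue;
    const path = m[4].split("?")[0]; // drop any query string
    if (!TRACKED.has(path)) continue;
    const userAgent = m[6];
    const byAgent = counts.get(path) ?? new Map<string, number>();
    byAgent.set(userAgent, (byAgent.get(userAgent) ?? 0) + 1);
    counts.set(path, byAgent);
  }
  return counts;
}

// Illustrative sample lines (made up for this example).
const sample = [
  '66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /robots.txt HTTP/1.1" 200 512 "-" "Googlebot/2.1"',
  '66.249.66.1 - - [10/Oct/2024:13:56:01 +0000] "GET /robots.txt?x=1 HTTP/1.1" 200 512 "-" "Googlebot/2.1"',
  '203.0.113.5 - - [10/Oct/2024:14:00:00 +0000] "GET /index.html HTTP/1.1" 200 1024 "-" "Mozilla/5.0"',
  '203.0.113.9 - - [10/Oct/2024:14:01:00 +0000] "GET /llms.txt HTTP/1.1" 404 0 "-" "GPTBot/1.0"',
].join("\n");

const hits = countTrackedHits(sample);
console.log(hits.get("/robots.txt")?.get("Googlebot/2.1")); // 2
```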

Cloudflare provides edge caching infrastructure to speed up your site: it stores copies of the parts of your site that change less often closer to users around the world. It does this by proxying your site. When a user requests something from your site, Cloudflare serves it from its cache if it has a copy. If not, it asks your site for it, returns it to the user, and (depending on a set of caching rules) will probably store it in its cache. In effect, Cloudflare sits in the middle between your site and your users, looking for ways to speed things up.
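The hit-or-miss logic described above can be sketched as a small read-through cache. This is an illustration, not how Cloudflare is implemented. It makes three assumptions: the origin is a plain function, the TTL is fixed, and `isCacheable` is a made-up stand-in for Cloudflare's real caching rules.

```typescript
// Sketch of read-through caching: serve from the cache when there's a fresh
// copy, otherwise ask the origin and (if the rules allow) keep a copy.
type Fetcher = (path: string) => Promise<string>;

class EdgeCache {
  private store = new Map<string, { body: string; expiresAt: number }>();
  private origin: Fetcher;
  private ttlMs: number;

  constructor(origin: Fetcher, ttlMs: number) {
    this.origin = origin;
    this.ttlMs = ttlMs;
  }

  async get(path: string, now: number = Date.now()): Promise<{ body: string; hit: boolean }> {
    const cached = this.store.get(path);
    if (cached && cached.expiresAt > now) {
      return { body: cached.body, hit: true }; // cache hit: origin not contacted
    }
    const body = await this.origin(path); // cache miss: go to the origin
    if (this.isCacheable(path)) {
      this.store.set(path, { body, expiresAt: now + this.ttlMs });
    }
    return { body, hit: false };
  }

  // Illustrative stand-in for caching rules, not Cloudflare's real list.
  private isCacheable(path: string): boolean {
    return /\.(css|js|png|jpg|svg)$/.test(path);
  }
}
```

With a cacheable file, only the first request reaches the origin until the TTL runs out. Anything the rules reject goes to the origin every time.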

Because of this, and because Cloudflare has a free plan, a lot of sites are on Cloudflare.

Cloudflare released a product called Workers, which is free for up to 100,000 requests a day. It can be used in many ways, but one of them is this: if your site is proxied through Cloudflare, we can run a bit of code whenever somebody visits a URL. In our case, that means we can track visits to /robots.txt, /sitemap.xml, and /llms.txt by running a bit of code that simply fetches the original file, returns it, and logs the request to a database.
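Here is a minimal sketch of that idea as a module Worker. It assumes a KV binding named `BOT_LOGS_KV`, which is the name used in the setup steps later on. The log entry shape, the key format and the `handleRequest` helper are our own illustrations, not the code of the script we're giving away. `ctx.waitUntil` lets the KV write finish after the response has been sent, so the bot doesn't wait on the log.

```typescript
// Sketch of a Worker that passes tracked files through and logs each visit.
// KVLike, Env and Ctx are minimal hand-written stand-ins for Cloudflare's
// KVNamespace and ExecutionContext types, so this compiles on its own.
interface KVLike {
  put(key: string, value: string): Promise<void>;
}
interface Env {
  BOT_LOGS_KV: KVLike;
}
interface Ctx {
  waitUntil(promise: Promise<unknown>): void;
}

const TRACKED_PATHS = new Set(["/robots.txt", "/sitemap.xml", "/llms.txt"]);

async function handleRequest(
  request: Request,
  env: Env,
  ctx: Ctx,
  fetchOrigin: (req: Request) => Promise<Response> = (req) => fetch(req),
): Promise<Response> {
  // On a Worker route, fetch(request) passes the request on towards the site.
  const response = await fetchOrigin(request);
  const path = new URL(request.url).pathname;
  if (TRACKED_PATHS.has(path)) {
    const time = new Date().toISOString();
    const entry = {
      path,
      time,
      status: response.status,
      userAgent: request.headers.get("user-agent") ?? "",
    };
    // Hypothetical key format: timestamp plus a random suffix to avoid clashes.
    const key = `${time}:${Math.random().toString(36).slice(2)}`;
    // Let the write complete after the response is sent.
    ctx.waitUntil(env.BOT_LOGS_KV.put(key, JSON.stringify(entry)));
  }
  return response;
}

// Module Worker entry point.
export default {
  fetch(request: Request, env: Env, ctx: Ctx): Promise<Response> {
    return handleRequest(request, env, ctx);
  },
};
```

The `fetchOrigin` parameter exists only so the handler can be exercised outside Cloudflare with a fake origin. In production the default `fetch` is used.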

Cloudflare provide a KV (key-value) database for this, which is free for 100,000 reads and 1,000 writes per day. The writes are what matter here, because every visit we log uses one write. So for free you can record 1,000 visits a day across the three files. To be honest, if you have more than that you have a serious bot problem!
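Each logged visit costs exactly one write, so the write quota is also the cap on logged visits. The sketch below spells out that arithmetic. It pairs it with a hypothetical key scheme that puts the UTC date first, so one day's entries share a common prefix. Neither the constant names nor the key format come from the published script.

```typescript
// Sketch: one KV write per logged visit, so the free plan's daily write
// allowance is also the daily cap on logged visits.
const FREE_KV_WRITES_PER_DAY = 1_000;
const WRITES_PER_LOGGED_VISIT = 1;

function maxLoggedVisitsPerDay(): number {
  return Math.floor(FREE_KV_WRITES_PER_DAY / WRITES_PER_LOGGED_VISIT);
}

// Hypothetical key scheme: "YYYY-MM-DD:path:epochMillis:nonce" (UTC date).
function logKey(path: string, when: Date, nonce: string): string {
  const day = when.toISOString().slice(0, 10);
  return `${day}:${path}:${when.getTime()}:${nonce}`;
}

console.log(maxLoggedVisitsPerDay()); // 1000
```

With a shared date prefix, Workers KV's `list({ prefix })` could fetch a single day's keys. List calls have their own daily allowance on the free plan.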

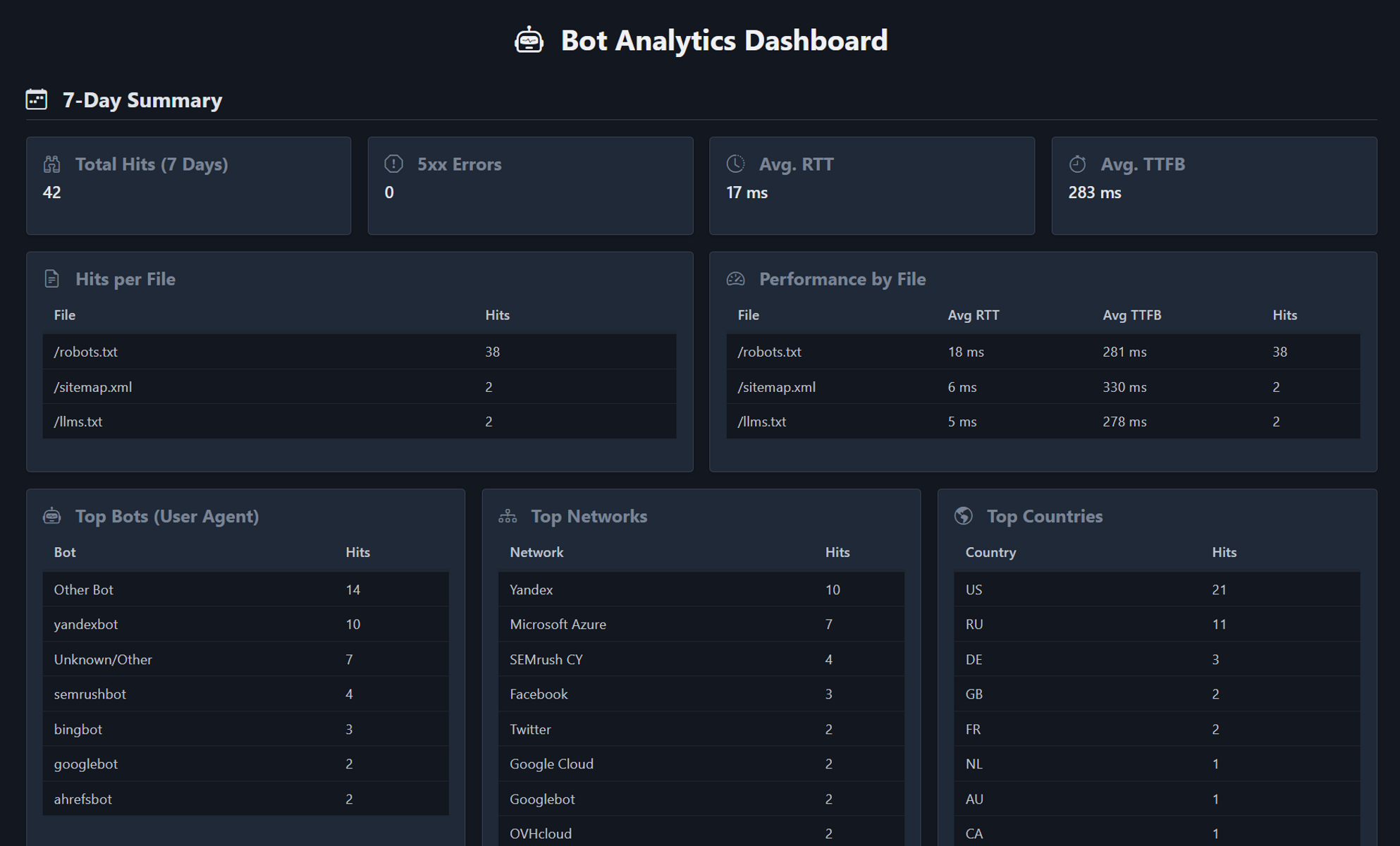

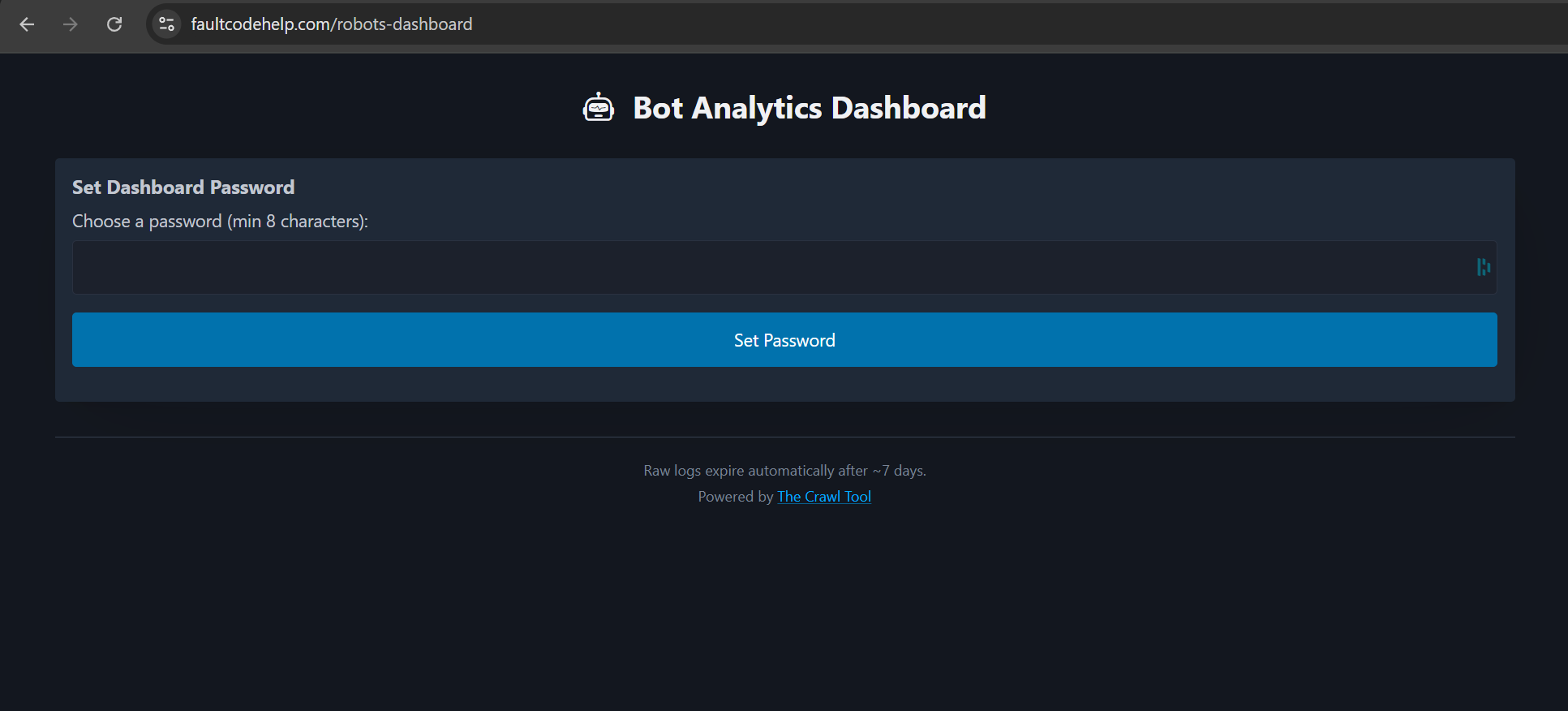

Using this, we wrote code that adds a /robots-dashboard URL to your site where you can see visits to /robots.txt, /sitemap.xml, and /llms.txt. We put it behind a password.
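The dashboard part mostly comes down to reading logged entries back and counting them. The sketch below groups visits by UTC day and by file. It assumes each entry is stored as JSON with a path and an ISO timestamp; this `LogEntry` shape is hypothetical and may not match what our script stores.

```typescript
// Sketch: turn raw log entries into per-day, per-file visit counts.
interface LogEntry {
  path: string;
  time: string; // ISO 8601 timestamp in UTC, e.g. "2024-05-01T08:00:00Z"
  userAgent: string;
}

// Returns day ("YYYY-MM-DD") -> { path: count }.
function dailyCounts(entries: LogEntry[]): Map<string, Record<string, number>> {
  const byDay = new Map<string, Record<string, number>>();
  for (const entry of entries) {
    const day = entry.time.slice(0, 10);
    const row = byDay.get(day) ?? {};
    row[entry.path] = (row[entry.path] ?? 0) + 1;
    byDay.set(day, row);
  }
  return byDay;
}

// Illustrative entries (made up for this example).
const entries: LogEntry[] = [
  { path: "/robots.txt", time: "2024-05-01T08:00:00Z", userAgent: "Googlebot" },
  { path: "/robots.txt", time: "2024-05-01T09:30:00Z", userAgent: "bingbot" },
  { path: "/llms.txt", time: "2024-05-02T10:00:00Z", userAgent: "GPTBot" },
];
console.log(dailyCounts(entries).get("2024-05-01")); // { '/robots.txt': 2 }
```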

We're giving this script away for free so you can put it on your Cloudflare proxied site!

Cloudflare build amazing technical products, but if I had one complaint, it's that their UI and product descriptions aren't exactly clear. Still, I've picked the easiest installation method, so if you managed to set up Cloudflare in the first place you should be okay. There are a few steps, though.

Firstly, you'll want to navigate to the longest URL known to man. Luckily, I can put it here as a hyperlink:

Robots Dashboard on Cloudflare Playground

I believe it doesn't work on Safari, so you'll need Chrome, Firefox, or a Chromium-based browser like Edge.

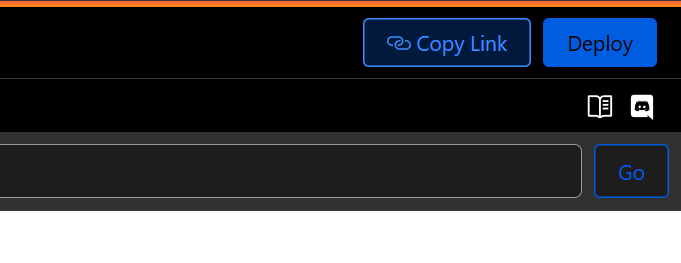

On the playground you will see code. It's there for you to check if you want to. But eventually you'll want to hit the Deploy button at the top right.

It's basically "Install" (see what I mean about UI clarity!).

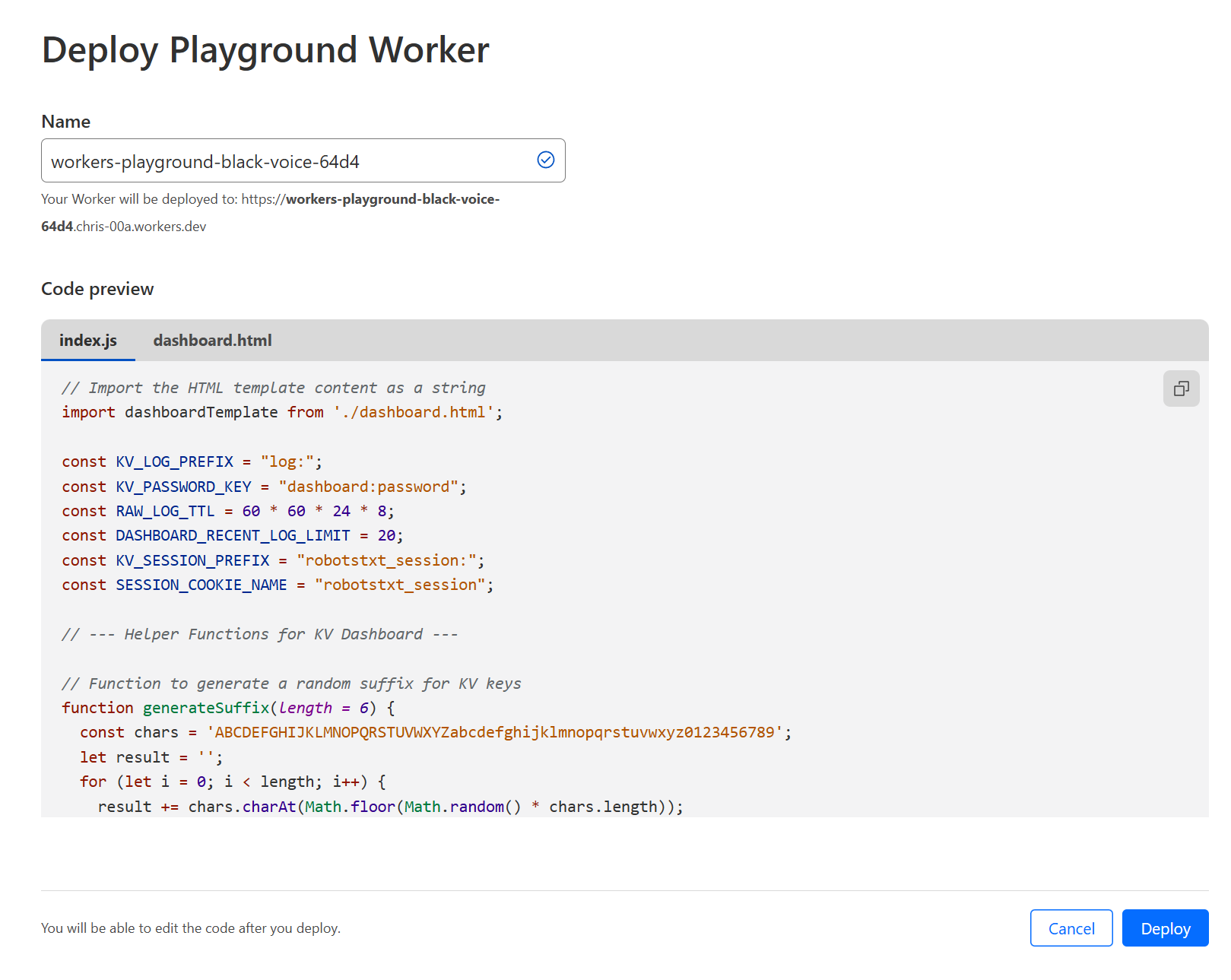

You might need to log in to Cloudflare, but you'll then come to a page like this.

Change the name to something reasonable, like robots-dashboard, and click the next Deploy button.

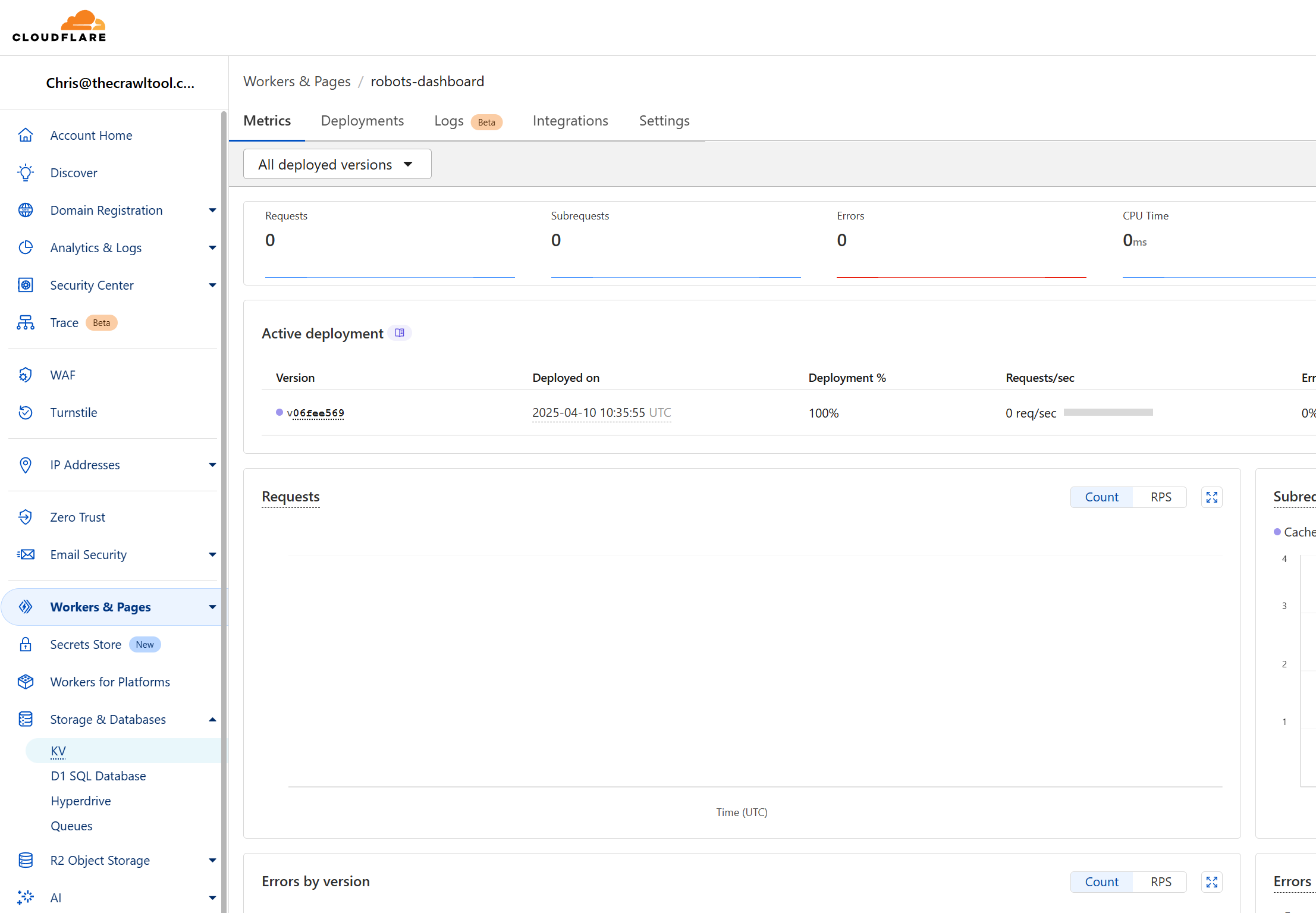

You'll end up on a screen like this, wondering whether this is really as easy as I described.

But don't worry. Expand "Storage & Databases" on the left menu and choose "KV".

This bit is so easy I won't even give screenshots! Click "Create", fill in a name like bot_logs, and click "Add".

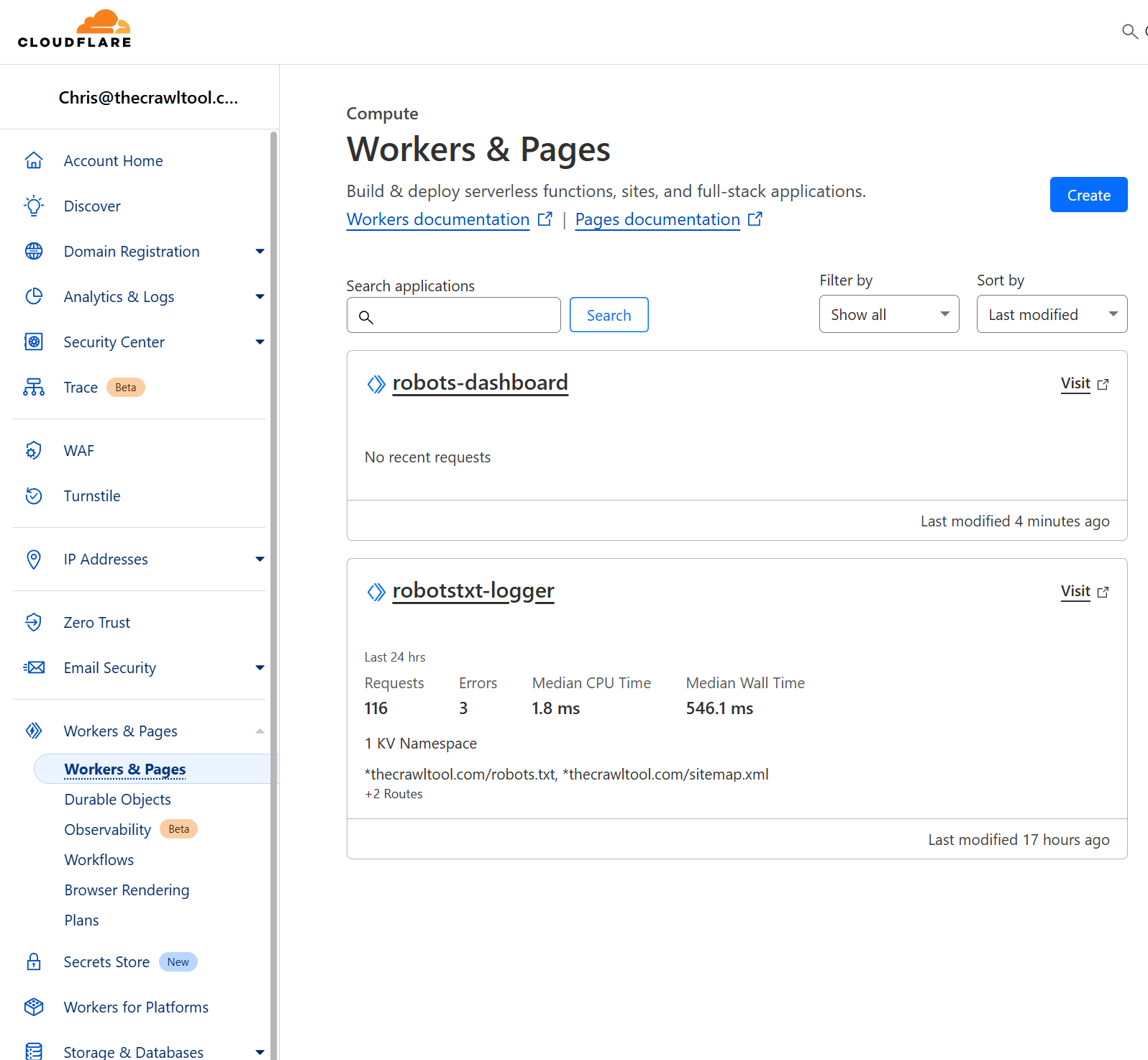

Expand "Workers & Pages" in the left menu and click "Workers & Pages", and you'll get something like this:

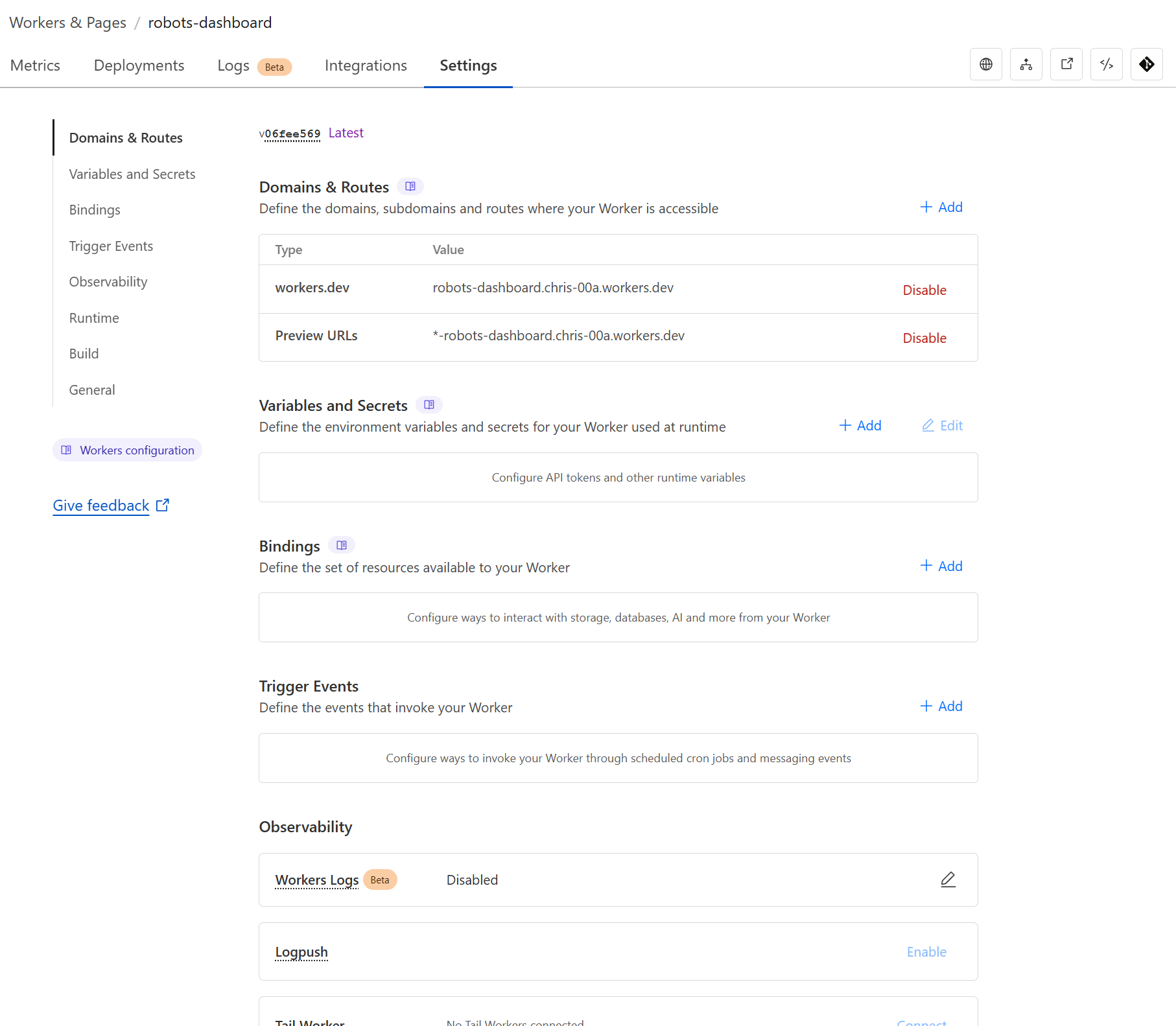

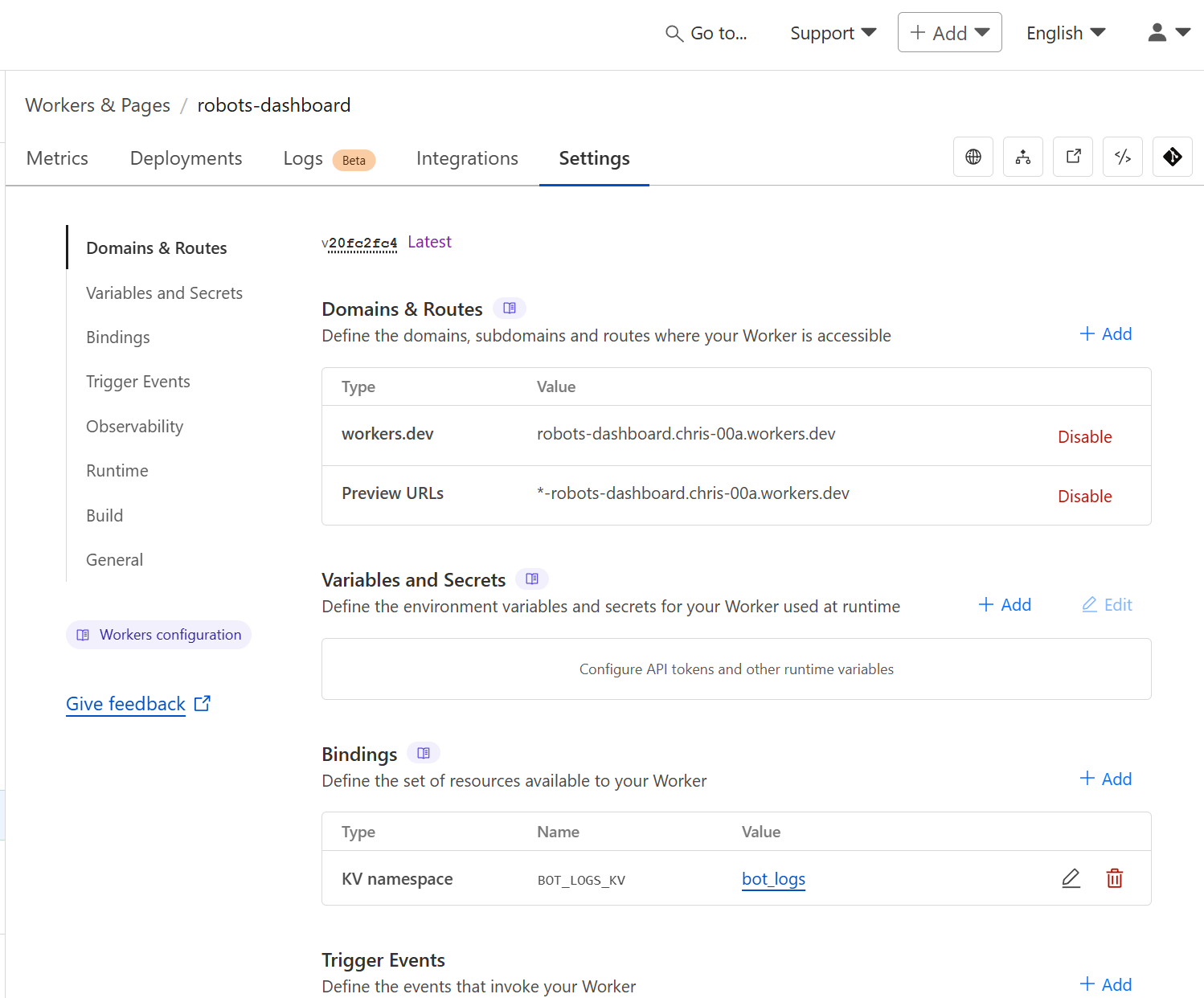

Here you can see the "robots-dashboard" worker we created with those two "Deploy" clicks. Mine shows two workers because I already have it running and, for demonstration purposes, I'm also setting it up on a test site. Click your "robots-dashboard" worker, choose "Settings" from the top menu, and you'll get something like this:

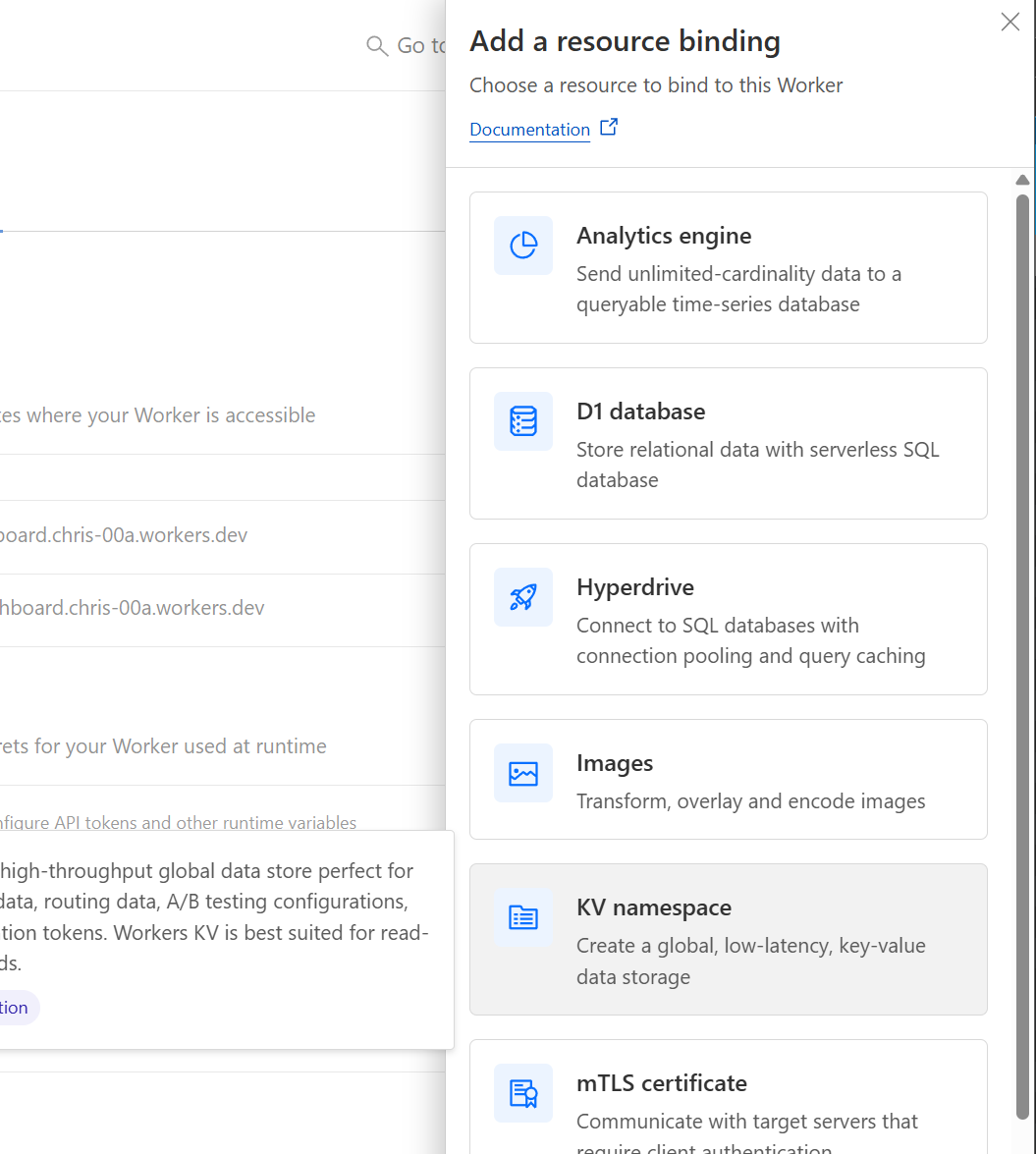

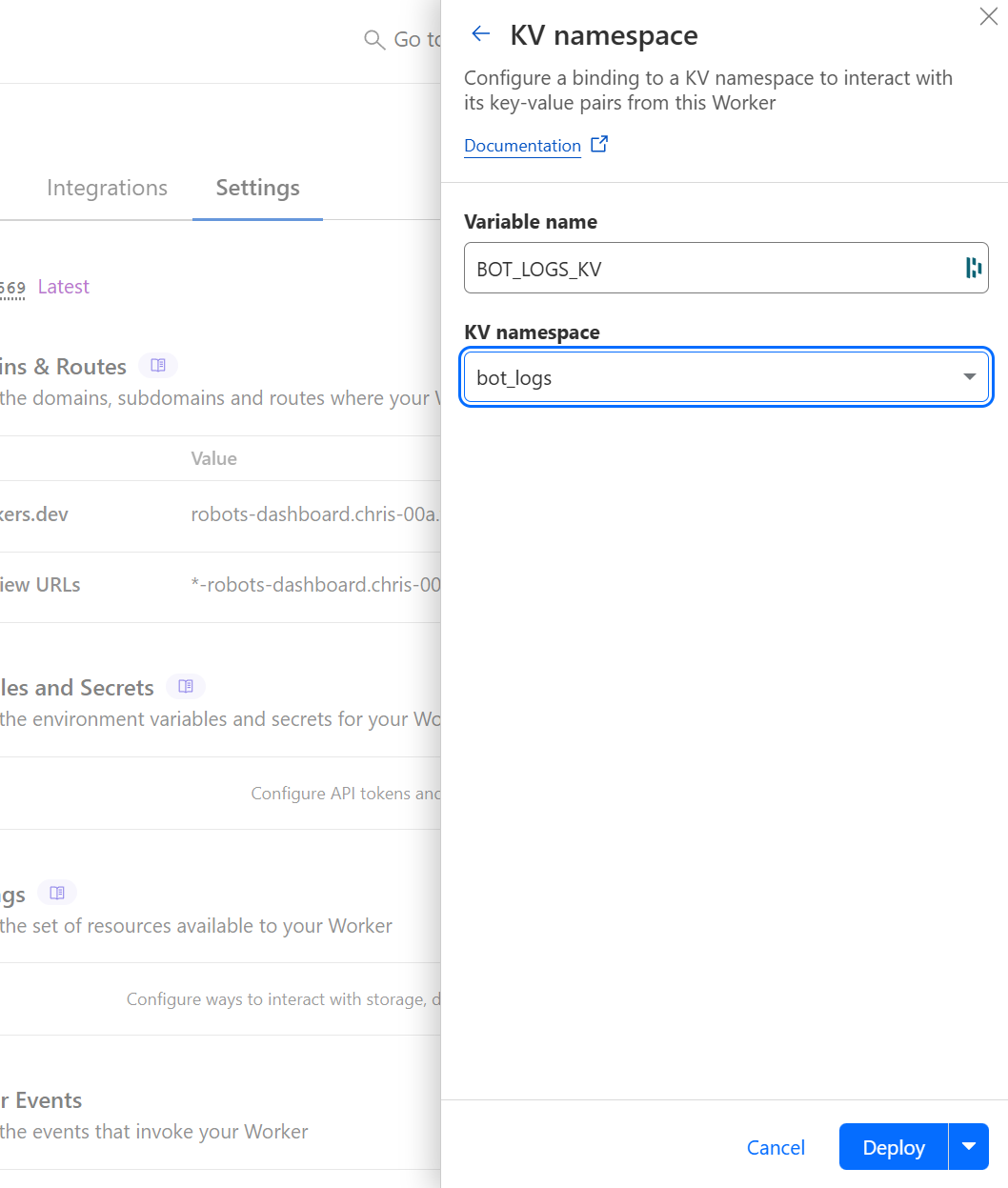

We've set up the code and the database, but we haven't yet told them how to work together. This screen lets us do that. First, we need to connect the database: under "Bindings", click the "+ Add" button and choose "KV Namespace".

Make sure you enter BOT_LOGS_KV as the variable name, select the bot_logs database we created earlier, and click Deploy.
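The exact name matters because a binding's variable name becomes a property of the `env` object the Worker receives, and JavaScript property names are case-sensitive. The sketch below shows a defensive lookup. `KVLike` is a hypothetical stand-in for Cloudflare's `KVNamespace` type, and `getLogStore` is our own helper.

```typescript
// Sketch: the binding's variable name is how the Worker finds the KV store
// on its env object, so a typo means the store simply isn't there.
interface KVLike {
  put(key: string, value: string): Promise<void>;
  get(key: string): Promise<string | null>;
}

function getLogStore(env: Record<string, unknown>): KVLike {
  const kv = env["BOT_LOGS_KV"];
  if (kv === undefined) {
    // A mistyped name (e.g. "bot_logs_kv") ends up here.
    throw new Error("Missing KV binding BOT_LOGS_KV; check the Worker's Bindings settings.");
  }
  return kv as KVLike;
}
```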

Now let's tackle the URLs that trigger our code.

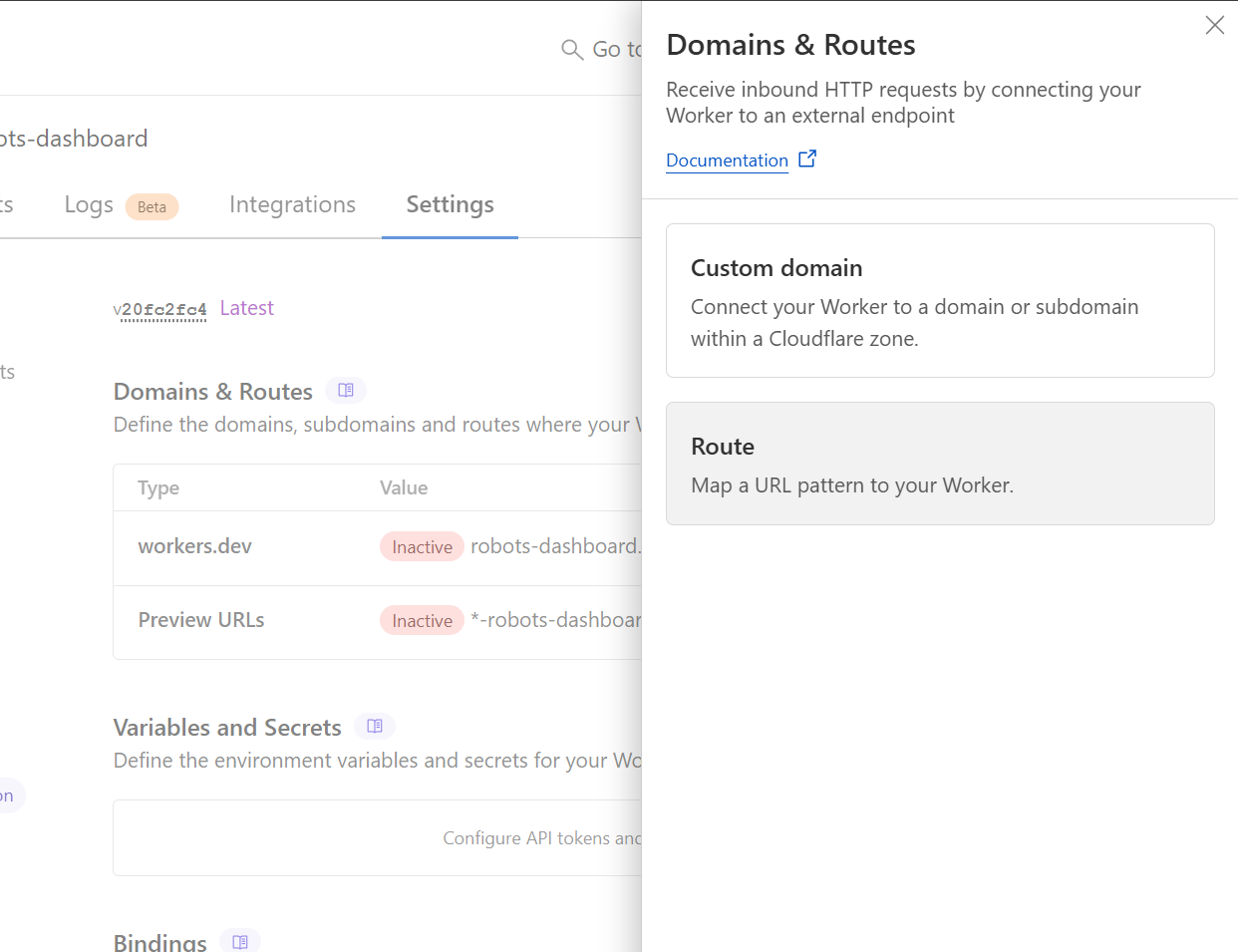

In the Domains & Routes section you can see two default entries, "workers.dev" and "Preview URLs". We don't want either, so for each one click the Disable button, then click "Disable" again to confirm.

Now click "+ Add" in the Domains & Routes section and choose "Route".

We want to first create a route that displays the dashboard.

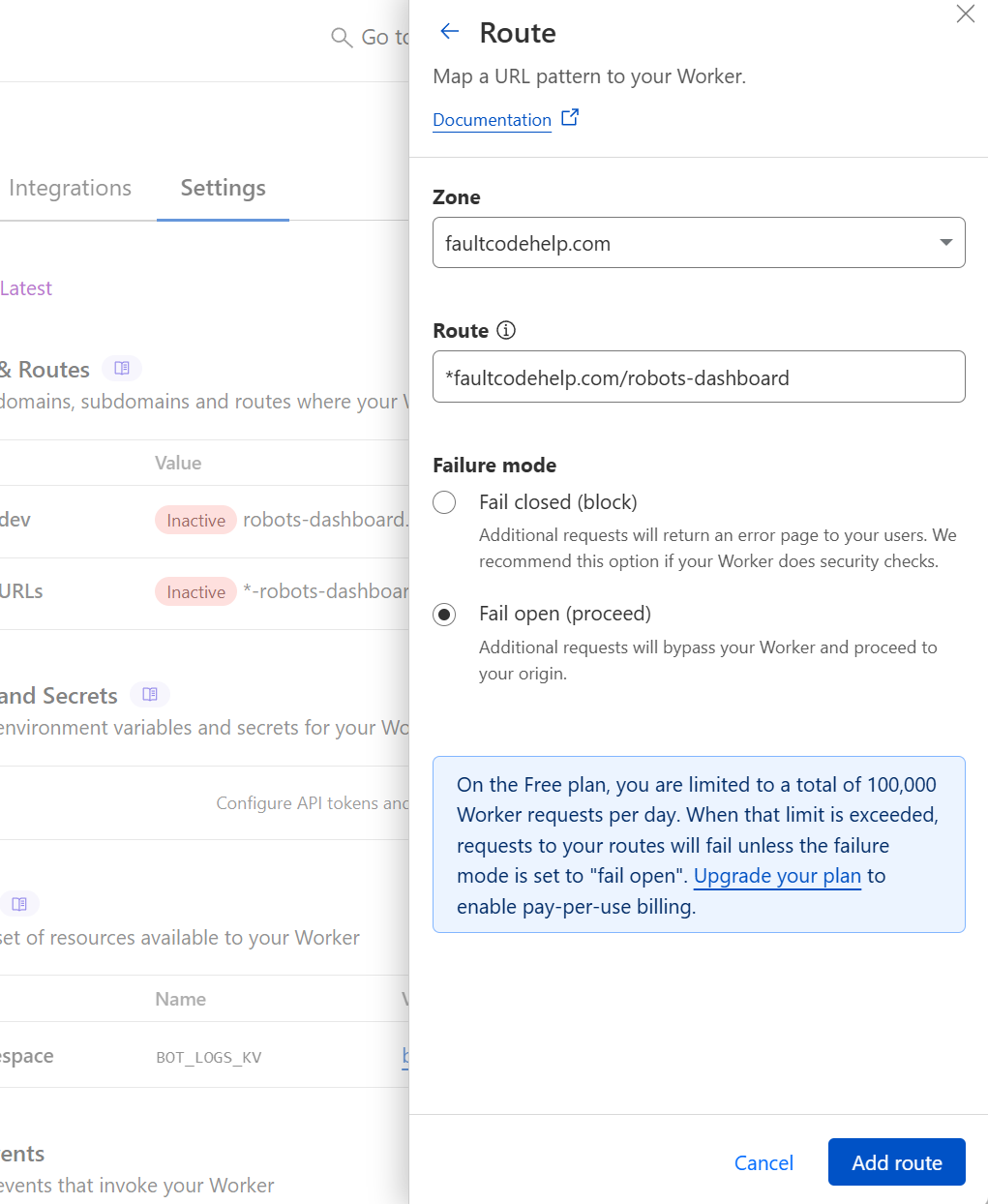

As shown above, choose your domain as the Zone. In Route, type *domain.com/robots-dashboard, replacing domain.com with your site's domain (don't include "www", and make sure there is no dot between the * and the domain name).
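A toy matcher makes the reason for "no dot" easy to see. This simplified sketch is our own approximation of route patterns. It looks only at hostname and path, and allows `*` only at the start of the host and the end of the path. A leading `*` matches any prefix, including an empty one, so `*domain.com` covers both `domain.com` and `www.domain.com`. `*.domain.com` would miss the bare domain. A route only applies within the selected Zone, so the loose prefix match doesn't reach other sites.

```typescript
// Simplified sketch of route pattern matching (hostname and path only).
function routeMatches(pattern: string, hostname: string, path: string): boolean {
  const slash = pattern.indexOf("/");
  if (slash === -1) return false; // patterns used here always include a path
  const hostPattern = pattern.slice(0, slash);
  const pathPattern = pattern.slice(slash);

  const hostOk = hostPattern.startsWith("*")
    ? hostname.endsWith(hostPattern.slice(1)) // "*" matches any prefix, even none
    : hostname === hostPattern;

  const pathOk = pathPattern.endsWith("*")
    ? path.startsWith(pathPattern.slice(0, -1)) // trailing "*" matches the rest
    : path === pathPattern; // otherwise the path must match exactly

  return hostOk && pathOk;
}

console.log(routeMatches("*domain.com/robots-dashboard", "www.domain.com", "/robots-dashboard")); // true
console.log(routeMatches("*.domain.com/robots-dashboard", "domain.com", "/robots-dashboard")); // false
```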

If you are on the free plan you will see "Failure mode"; choose "Fail open (proceed)". This tells Cloudflare that if you've used up your free quota, requests should just go to the original site. That isn't so important for this route, but for the others it ensures, for example, that your robots.txt stays available. Click "Add route".
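The "Fail open" setting covers the Worker's own request quota. The same idea can be applied inside the code: if the KV write fails, for instance because the daily write allowance has run out, swallow the error so the file is still served. Here is a minimal sketch, with `logVisitFailOpen` as a hypothetical helper; we're not claiming the published script does exactly this.

```typescript
// Sketch: fail-open logging. A failed write is reported and ignored so it
// can never turn into a missing robots.txt for the visiting bot.
type Put = (key: string, value: string) => Promise<void>;

async function logVisitFailOpen(put: Put, key: string, value: string): Promise<boolean> {
  try {
    await put(key, value);
    return true; // logged
  } catch (err) {
    console.warn("visit not logged:", err);
    return false; // not logged, but the caller carries on serving the file
  }
}
```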

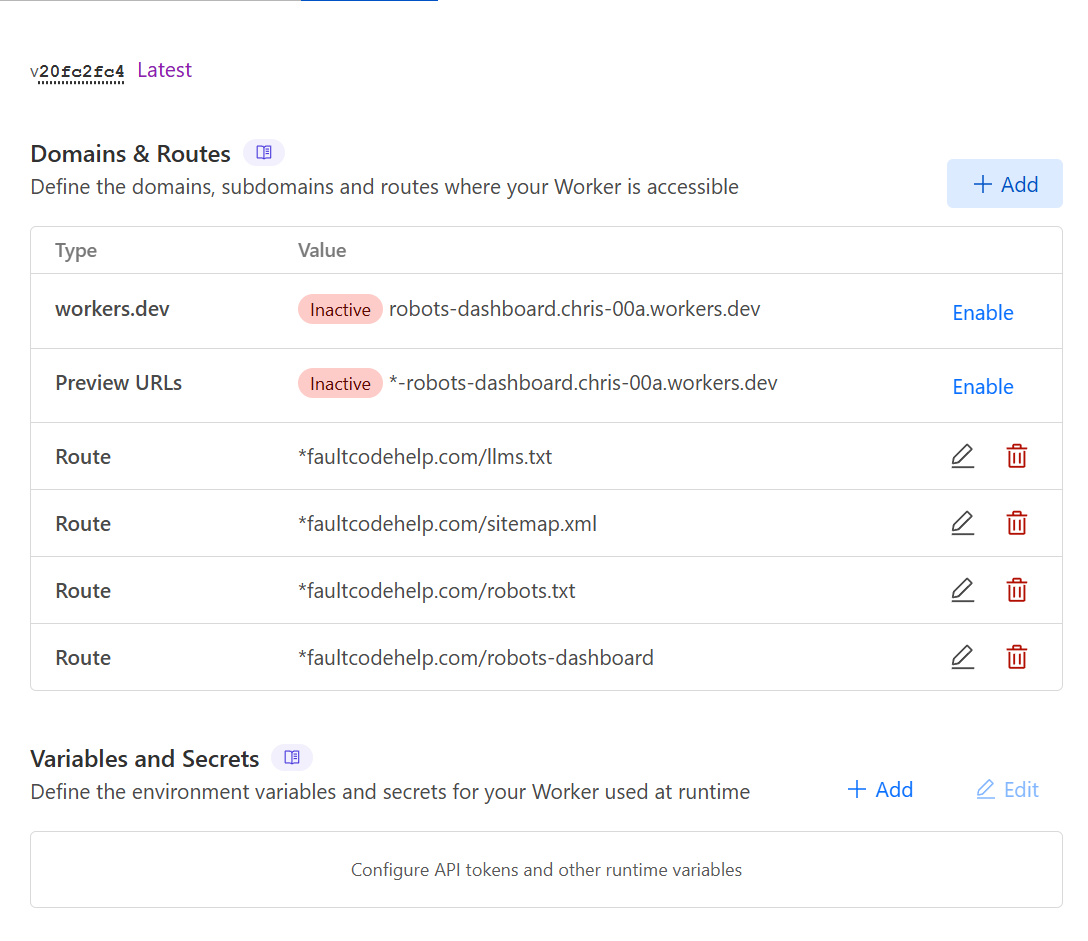

Use the same process, Zone, and Fail open setting to set up three more routes, with the following in Route (replacing domain.com with your own):

*domain.com/robots.txt

*domain.com/sitemap.xml

*domain.com/llms.txt
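If you set this up on several sites, you can generate the four patterns from a domain. The sketch below uses a hypothetical `buildRoutes` helper. It strips any scheme, any path and a leading "www.", and makes sure no dot follows the `*`, as the steps above require.

```typescript
// Sketch: build the four route patterns from whatever domain string you have.
function buildRoutes(input: string): string[] {
  const domain = input
    .trim()
    .toLowerCase()
    .replace(/^https?:\/\//, "") // drop any scheme
    .replace(/\/.*$/, "") // drop any path
    .replace(/^www\./, "") // drop a leading "www."
    .replace(/^\.+/, ""); // make sure no dot follows the "*"
  const paths = ["/robots-dashboard", "/robots.txt", "/sitemap.xml", "/llms.txt"];
  return paths.map((p) => `*${domain}${p}`);
}

console.log(buildRoutes("https://www.Example.com/"));
// [ '*example.com/robots-dashboard', '*example.com/robots.txt', '*example.com/sitemap.xml', '*example.com/llms.txt' ]
```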

If everything's good it should look like this:

Congratulations, your Master's Degree Certificate in setting things up on Cloudflare is in the post. Just kidding, it wasn't that hard, but I do wish Cloudflare would offer some sort of one-click configuration system for less technical folks.

There is no time for a tea break yet. Open your site with /robots-dashboard appended to the domain, like this:

Then choose a password to secure the analytics, so that only you can get in. The setup is now complete, and you can have that tea break now if you want to.

If not, you can log in with your password and check your statistics. You probably won't have any yet. You can visit /robots.txt yourself to force an entry and check that it is working, or you can just wait for a real one.

You're all set up. Optionally, you can now thank Cloudflare for the infrastructure that makes this possible. Compulsorily, you must check out The Crawl Tool, the best and most innovative SEO tool on the planet, which lets you track more than just robots.txt and supercharges your site.
