Some Experiments into How Google's Crawler works



At just 85MB, the AI text detection model we created really is tiny, but before we get into that we need to address the context and the why. In the last few years, Large Language Models have become really good at generating human-like text. For web professionals and those interested in websites, that has led to several obvious effects.

Around these things a number of myths have also appeared.

AI models take a lot of resources, and therefore money, to run. The tools to detect AI generated text generally use costly methods, which means you can use them for free for a small amount of text, but then they want ... they need ... you to pay. A quieter revolution in the world of AI is that small models can now be run in a web browser. A really small AI text detection model can therefore be run at very low cost, enabling it to be provided for free for as many uses as someone wants.

Building an AI model is a process that has been getting easier. But it's still overly complicated, especially if it's an area you're less familiar with. So I used Claude 3.5 in Cursor AI to give me some assistance. This is an experience where it constantly gets it wrong, but if you know just enough to keep prompting it in the right direction then you eventually get there. I'm not usually a fan of AI coding because of the number of errors and corrections you need to deal with, which normally take more time than actually coding it yourself. But here, where it would otherwise take weeks to learn about the topic, it worked effectively. It is weird, though, that an AI Large Language Model (LLM) was helping me create an AI to detect AI LLM text!

The Hugging Face website has some datasets we can choose from, making the process a bit simpler. I chose MAGE. In doing so I'd also effectively decided to train it only for English, which I think was a reasonable compromise for such a small model. Before I knew it, my GPU was on a 10-hour training run. This is the first lesson: a small, simple model really doesn't take that long to train. I just left it overnight.

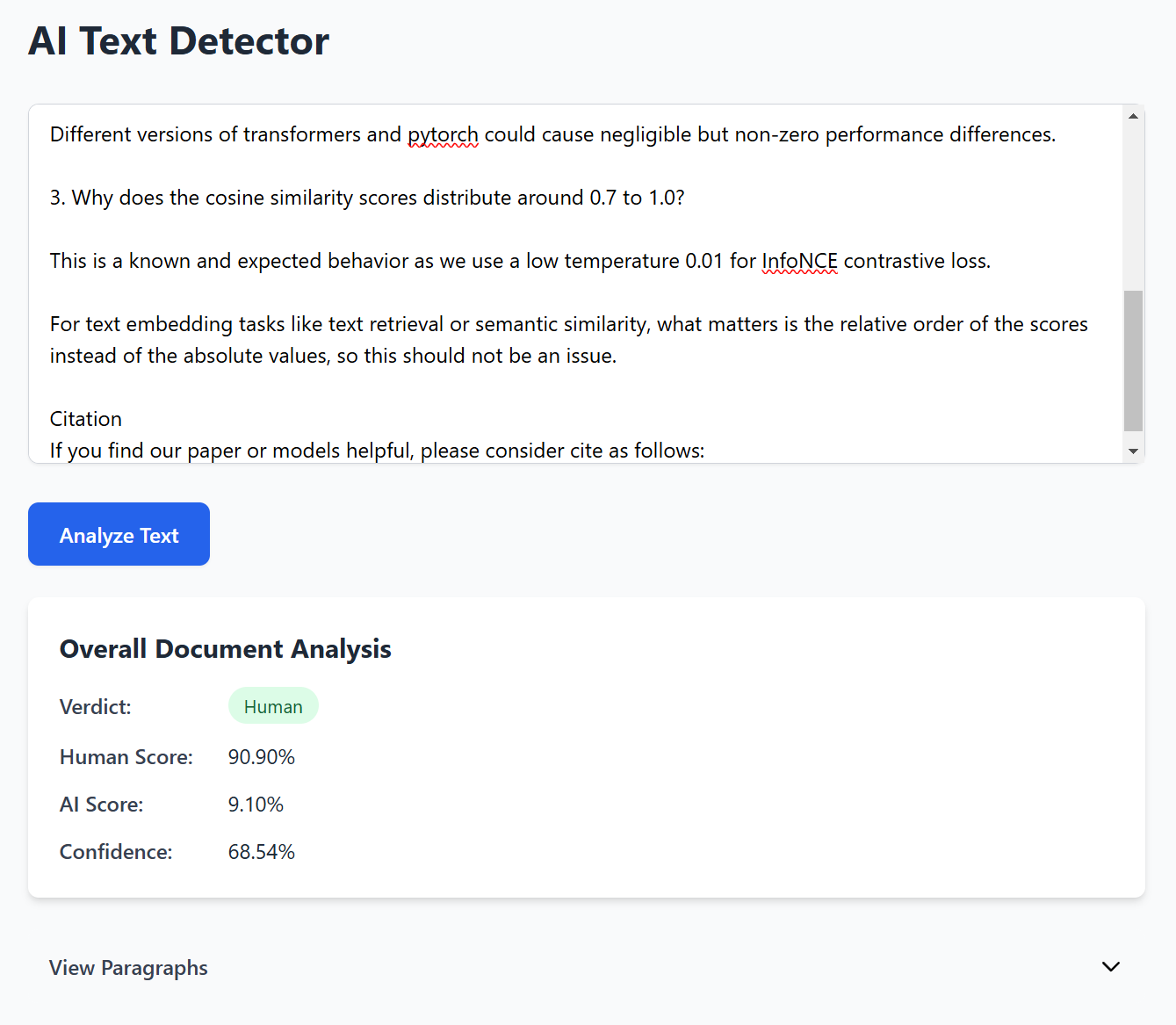

After waking up to a newly built AI model, it was time to test it. Against samples in the MAGE dataset that it hadn't seen, it scored just under 90% accuracy. With text taken from an AI text dataset and an equivalent human text dataset, it scored an accuracy of 83.64%.

Here is our first "myths" learning point. Tools that test for AI generated text will often quote an accuracy figure like this. Indeed, scientific papers often do the same. But in a real world context that figure is probably not suited to the task. If you're using them to find paragraphs of text that somebody may have created with AI, for example if you're a teacher checking a student's essay, then what is probably important to you is how often it misclassifies human text as AI. The same is probably true if you're analyzing a piece of text paragraph by paragraph.

When my tiny model makes an error in classifying something as AI or human text then it has a strong tendency to misclassify human text as AI text, rather than the other way around.
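To make the difference between the headline accuracy and the error that matters to a teacher concrete, here is a minimal sketch. The labels and counts are made up for illustration and are not results from the model; `detection_metrics` is a hypothetical helper name.

```python
from dataclasses import dataclass


@dataclass
class DetectionMetrics:
    accuracy: float
    false_positive_rate: float  # share of human texts wrongly flagged as AI
    false_negative_rate: float  # share of AI texts wrongly passed as human


def detection_metrics(true_labels, predicted_labels):
    """Compare predictions with ground truth; every label is "human" or "ai"."""
    pairs = list(zip(true_labels, predicted_labels))
    if not pairs:
        raise ValueError("need at least one labelled example")
    human_total = sum(1 for truth, _ in pairs if truth == "human")
    ai_total = sum(1 for truth, _ in pairs if truth == "ai")
    correct = sum(1 for truth, pred in pairs if truth == pred)
    human_flagged = sum(1 for truth, pred in pairs if truth == "human" and pred == "ai")
    ai_missed = sum(1 for truth, pred in pairs if truth == "ai" and pred == "human")
    return DetectionMetrics(
        accuracy=correct / len(pairs),
        false_positive_rate=human_flagged / human_total if human_total else 0.0,
        false_negative_rate=ai_missed / ai_total if ai_total else 0.0,
    )


# Made-up example: 10 human texts followed by 10 AI texts.
truth = ["human"] * 10 + ["ai"] * 10
# The imaginary detector flags 3 human texts as AI and misses 1 AI text.
predicted = ["ai"] * 3 + ["human"] * 7 + ["human"] * 1 + ["ai"] * 9

metrics = detection_metrics(truth, predicted)
print(metrics)
```

In this invented example the accuracy is 80%, yet three in ten human texts are flagged as AI. That second number is the one a single accuracy figure hides.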

If other models have the same tendency, which seems logical, then paragraph by paragraph analysis just doesn't make much sense. Because of the way the AI works, we do need to feed in text in chunks, and using paragraphs as those chunks makes sense. Then we can calculate a score across the whole text. But what I'm saying is that the model, and probably such models in general, is way better at forming an opinion on longer texts than it is at the paragraph level or shorter.
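As a rough sketch of "score the chunks, then calculate a score across the whole text", the snippet below splits a document on blank lines and takes a length-weighted average of the paragraph scores. The weighting by word count is an assumption for illustration, not necessarily how the tool combines results, and `fake_score` is a stand-in rule rather than a real detector.

```python
import re


def split_paragraphs(text):
    """Split on blank lines and drop empty chunks."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def document_score(text, score_paragraph):
    """Length-weighted average of per-paragraph AI probabilities (0.0 to 1.0)."""
    paragraphs = split_paragraphs(text)
    if not paragraphs:
        raise ValueError("no text to score")
    weights = [len(p.split()) for p in paragraphs]  # word count per paragraph
    scores = [score_paragraph(p) for p in paragraphs]
    return sum(w * s for w, s in zip(weights, scores)) / sum(weights)


def fake_score(paragraph):
    """Stand-in for the real model: NOT a detector, just a fixed rule for the demo."""
    return 0.9 if "delve" in paragraph.lower() else 0.2


sample = (
    "Let us delve into the topic.\n\n"
    "I wrote this bit myself on the train home last night."
)
overall = document_score(sample, fake_score)
print(round(overall, 3))
```

Weighting by length means one short, noisy paragraph cannot swing the overall result much, which fits the observation that longer texts give a more reliable opinion.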

Following these automated tests, I did some manual testing. I took some known AI generated articles from web pages and some known non-AI ones and tested them. This is, by nature, a much more limited form of testing, but the model seemed to perform pretty well for standard website articles. One interesting observation is that it classified virtually all news articles I tried as AI, even when I could be sure they weren't. This suggests that LLM generated text and news site text follow similar patterns, and that telling them apart takes more nuance than a small model can handle. It would probably take a larger model and some specific training to handle this. It also suggests where a lot of the training data for LLMs may have come from!

An advantage of the previous tests is that they were on my machine and I could use the GPU, so they were quick to do. Because it's such a small model, it runs really fast on the GPU. One common myth I read is that search engines can't detect AI content. This is based on the idea that it would simply take too many computing resources to run scans across so many pages.

Whilst we don't know if they're doing it in practice, the ability to create such a small model puts that idea in serious question. Assume you were a search engine: you could use a small model like this to test a fairly large number of web pages. Whilst it may not be the most accurate model in the world, it has enough accuracy to give an indication that a page might be AI. Following this, you could take a second, larger and more detailed model and test just the pages that tested positive. This would significantly reduce the amount of resources you would need to expend.
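The two-stage idea can be sketched in a few lines. Everything here is hypothetical: the page names, the scores, and the 0.5 thresholds are invented, and the two scoring functions are lookups standing in for a small and a large model.

```python
def cascade_classify(pages, cheap_score, expensive_score,
                     cheap_threshold=0.5, final_threshold=0.5):
    """Run the cheap model on every page and the expensive one only on pages it flags.

    Returns the pages finally classified as AI and the number of expensive calls made.
    """
    flagged = []
    expensive_calls = 0
    for page in pages:
        if cheap_score(page) < cheap_threshold:
            continue  # the cheap model says "probably human", so stop here
        expensive_calls += 1
        if expensive_score(page) >= final_threshold:
            flagged.append(page)
    return flagged, expensive_calls


# Invented scores standing in for real model output.
cheap_scores = {"a": 0.1, "b": 0.8, "c": 0.3, "d": 0.9, "e": 0.2}
expensive_scores = {"a": 0.0, "b": 0.9, "c": 0.1, "d": 0.2, "e": 0.1}

pages = ["a", "b", "c", "d", "e"]
flagged, expensive_calls = cascade_classify(
    pages, cheap_scores.get, expensive_scores.get
)
print(flagged, expensive_calls)
```

In this toy run the expensive model is called for two of the five pages. Page "d" shows the point of the second stage: the cheap model flags it, but the larger model clears it.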

Regardless of whether they're doing it at the moment or not, building the tiny AI has shown that detecting AI content is not just a theory but something that is very likely implementable today. You should not expect AI content to perform well in search engines in anything but the very short term.

Whilst search engines would want to run a tiny AI model as fast as possible, we want to run it as widely available and at as low a cost as possible. To do this I used some new web technologies and traded in "time". The most important of these was ONNX Runtime, but transformers.js is also worthy of mention. I spent some time with Claude 3.5 working out how to turn the model into an ONNX format model and also how to make it a bit smaller. Then I implemented it in the web interface that I've put here.

This allowed me to test texts more quickly. Notably, the model itself runs slower because it is now using the browser and the CPU, rather than the GPU. But it came with a nicer interface and a cost to run of practically zero. So I spent some more time testing articles.

Originally it listed every paragraph and the results, but for the reasons given earlier I didn't like that so much and wanted the focus to be on the Overall Document Analysis. I didn't want to get rid of it completely though, so collapsed it under "View Paragraphs".

Feel free to play with the live AI Text Detection Tool to see how it works. You can use it as much as you like, no limits.

The speed was not great, so I worked on making it work with web workers. This basically just means that we can run multiple threads in the background and therefore increase the speed at which we get a result.
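The tool itself uses web workers in the browser. As a loose analogy only, the same fan-out pattern looks like this in Python with a thread pool: paragraphs are handed to background workers and the scores come back in the original order. `fake_score` is a placeholder for model inference, and the worker count of 4 is an arbitrary choice.

```python
from concurrent.futures import ThreadPoolExecutor


def score_paragraphs_in_parallel(paragraphs, score_paragraph, max_workers=4):
    """Score paragraphs on a pool of workers, returning scores in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(score_paragraph, paragraphs))


def fake_score(paragraph):
    """Placeholder for model inference: a fake score derived from the word count."""
    return min(len(paragraph.split()) / 10, 1.0)


paragraphs = [
    "one two three",
    "four five",
    "six seven eight nine ten eleven twelve",
]
scores = score_paragraphs_in_parallel(paragraphs, fake_score)
print(scores)
```

Python threads do not speed up pure Python CPU-bound work, so this only illustrates the structure. In the browser, each web worker runs on its own thread, which is where the speed gain described above comes from.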

However, all this proved to be too much for mobile phones. Firstly, a library bug meant I had to use an older version of a library, and secondly, the web workers weren't playing well. Ultimately I decided to make a second, simplified interface for mobile. This is slower, but it works, and I think the tradeoff is worth it as this is really a tool where most people will copy and paste documents on a desktop computer.

If you look on the internet you will see no shortage of tools that claim to "Humanize" AI text. Even some of The Crawl Tool's competitors have them. To understand these we first need to understand that the AI model that detects AI text content is just looking for patterns and indicators in the text it is given in order to try to classify it as human or AI.

If you have ever used one of these "Humanizer" tools, you might have been surprised to see how often they create text that doesn't seem human at all. This is because they don't make things human, they simply try to obfuscate the patterns and indicators in the text in a way that doesn't get detected by AI text detection models.

The problem is that it is a program doing this, not a human. As it's a program it will put its own patterns and indicators in the text. It's just that no detector available to the public is looking for those particular patterns as it has never been trained on them.

Content from these humanizers doesn't read any more "human", often less so. So there's only one reason for using them: to trick search engines. The issue here, of course, is that if this becomes a successful and widely used strategy, it is somewhat trivial to also train models on that "humanizer" output. A simple model can be done in a day! That it passes known AI text detection tools now doesn't mean it passes non-public ones that companies may own, and it doesn't mean it will pass forever. It's not "humanized", and at best it's short term content waiting to be wiped out in the future.

Traditional AI text detection tools take lots of resources to run, making them costly. Whilst they have free plans, they eventually need to charge you for access. I'm not sure those costs are in line with the amount of utility they provide. Whilst it is possible to get a fairly accurate overall score for an article, a lot of the other features (especially at a more granular level in terms of the text) are essentially worthless. This tiny model is virtually cost free to run because it runs in the web browser, though its size means it is likely less accurate than commercial models, doesn't do languages other than English (which other models may) and underperforms in some cases (e.g. news). This makes its main benefits:

I like the idea I've mentioned a few times in this article of using a multi-model approach, where the smaller model determines whether analysis goes on at a deeper level. For that reason, I might consider making a larger model.

There are a couple of stages where we might be able to integrate this into The Crawl Tool. But there are some hurdles to solve before that's possible. So I'll be looking into how this could work and what The Crawl Tool can do with it.

Once again, check it out here!

